Contact Dr. Cang Ye

Phone: (804) 828-0346

Google Voice: (501) 237-1818

Fax: (804) 828-2771

Email: cye@vcu.edu

Laboratory

Dept. of Computer Science

Virginia Commonwealth University

401 West Main Street, E2264

Richmond, VA 23284-3019

Office

Dept. of Computer Science

Virginia Commonwealth University

401 West Main Street, E2240

Richmond, VA 23284-3019

Robotics Laboratory

Department of Computer Science, College of Engineering

Virginia Commonwealth University

© 2016 Dr. Ye's LAB. ALL RIGHTS RESERVED.

Mobile robot terrain mapping with 2-D laser rangefinder

Mapping with the Gorilla robot

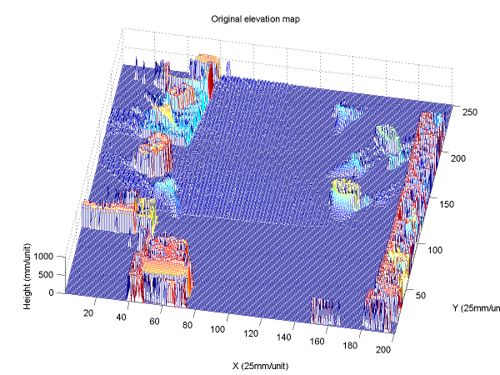

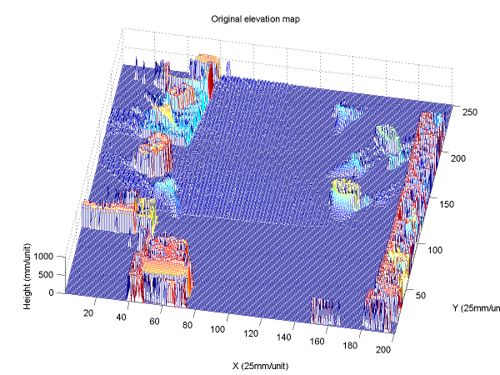

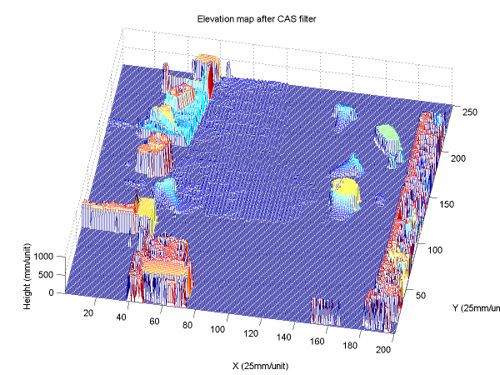

Elevation map built by our terrain mapping method without the proposed filter

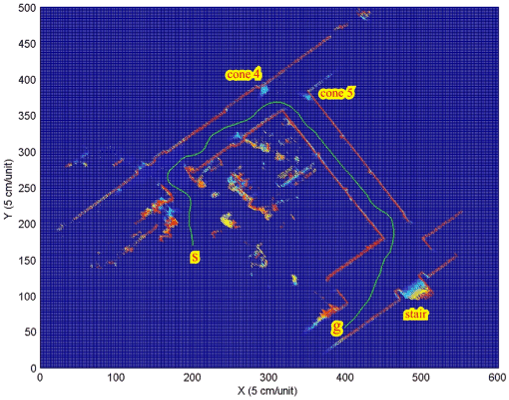

Mapping with the Segway Robotic Mobility Platform (Segway RMP)

Autonomous navigation of mobile robots on rugged terrain (i.e., indoor environments with debris on the floor, or outdoor off-road environments) requires the capability to decide whether an obstacle should be traversed or circumnavigated. The ability to make this decision and to actually execute it is called “obstacle negotiation” (ON). A crucial issue in ON is terrain mapping. Research efforts on terrain mapping have addressed indoor environments, outdoor off-road terrain, and planetary terrain. Most existing methods employ stereovision, which is sensitive to environmental conditions (e.g., ambient illumination) and has low range resolution and accuracy. As an alternative or supplement, 3-D Laser Rangefinders (LRFs) have been employed since the early nineties. However, 3-D LRFs are very costly, bulky, and heavy, so they are not suitable for small and/or expendable robots. Furthermore, most of them are designed for stationary use because of their slow scan speed in elevation.

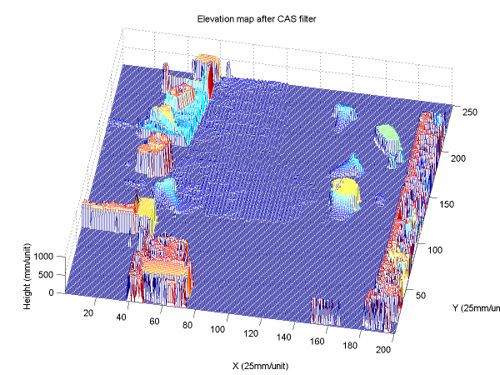

A more feasible solution for lower-cost robots is a 2-D LRF. However, existing works use a 2-D LRF as a safety device (e.g., for collision warning) or as an auxiliary sensor to stereovision. In this research, we propose a new terrain mapping method, called "in-motion terrain mapping," for a mobile robot using a 2-D LRF. A Sick LMS 200 (a 2-D LRF) is mounted on the mobile robot so that it looks forward and downward. When the robot is in motion, the fanning laser beam sweeps the ground ahead of the robot and produces continuous range data on the terrain. These range data are then transformed into world coordinates using the pose data from a 6-DOF Proprioceptive Pose Estimation system, resulting in a terrain elevation map. The laser range data are usually corrupted by mixed pixels, missing data, random noise, and artifacts, which cause the map to misrepresent the terrain. To deal with these problems, we propose an innovative filtering algorithm that removes only the corrupted pixels and fills in the missing data. Our extensive indoor and outdoor mapping experiments demonstrate that the proposed filter outperforms conventional filters in both erroneous-data reduction and map-detail preservation.
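The geometric core of in-motion mapping (projecting each 2-D scan into world coordinates with the robot's 6-DOF pose and accumulating the points into an elevation grid) can be sketched as follows. This is a minimal illustration under stated assumptions, not the lab's implementation. The following are all hypothetical: the frame convention (x forward, y left, z up), the Z-Y-X (yaw, pitch, roll) pose format, the mounting parameters `mount_tilt` and `mount_height`, the rule that non-positive or out-of-range readings count as missing data, and the choice to keep the highest point per grid cell. The proposed filter is not reproduced here.

```python
import math
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = List[List[float]]


def rot_zyx(roll: float, pitch: float, yaw: float) -> Mat3:
    """Rotation matrix R = Rz(yaw) @ Ry(pitch) @ Rx(roll), body-to-world.

    Assumed convention: x forward, y left, z up, so a positive pitch
    tilts the x-axis (the LRF's forward beam) downward.
    """
    cr, sr = math.cos(roll), math.sin(roll)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cy, sy = math.cos(yaw), math.sin(yaw)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]


def apply(rot: Mat3, v: Vec3) -> Vec3:
    """Multiply a 3x3 matrix by a 3-vector."""
    return (
        rot[0][0] * v[0] + rot[0][1] * v[1] + rot[0][2] * v[2],
        rot[1][0] * v[0] + rot[1][1] * v[1] + rot[1][2] * v[2],
        rot[2][0] * v[0] + rot[2][1] * v[1] + rot[2][2] * v[2],
    )


def scan_to_world(
    ranges: List[float],
    start_angle: float,   # bearing of the first beam in the scan plane (rad)
    angle_step: float,    # angular resolution between beams (rad)
    mount_tilt: float,    # hypothetical downward tilt of the LRF (rad)
    mount_height: float,  # hypothetical LRF height above the body origin (m)
    pose: Tuple[float, float, float, float, float, float],  # x, y, z, roll, pitch, yaw
    max_range: float = 8.0,  # hypothetical validity limit (m)
) -> List[Vec3]:
    """Project one 2-D scan into world coordinates, skipping missing returns."""
    x0, y0, z0, roll, pitch, yaw = pose
    r_mount = rot_zyx(0.0, mount_tilt, 0.0)  # sensor frame -> body frame
    r_body = rot_zyx(roll, pitch, yaw)       # body frame -> world frame
    points: List[Vec3] = []
    for i, r in enumerate(ranges):
        if r <= 0.0 or r >= max_range:
            continue  # hypothetical missing-data convention: drop the beam
        theta = start_angle + i * angle_step
        # Each beam lies in the sensor's own x-y scan plane.
        px, py, pz = apply(r_mount, (r * math.cos(theta), r * math.sin(theta), 0.0))
        wx, wy, wz = apply(r_body, (px, py, pz + mount_height))
        points.append((wx + x0, wy + y0, wz + z0))
    return points


def update_elevation_map(
    grid: Dict[Tuple[int, int], float], points: List[Vec3], cell: float = 0.05
) -> None:
    """Bin world points into square cells, keeping the highest z per cell."""
    for x, y, z in points:
        key = (math.floor(x / cell), math.floor(y / cell))
        grid[key] = max(z, grid.get(key, -math.inf))
```

For a level robot at the origin with the LRF 1.0 m up and tilted 30° down, `scan_to_world([2.0], 0.0, 0.01, math.radians(30), 1.0, (0, 0, 0, 0, 0, 0))` places the center beam on the ground at roughly x = 1.732 m. Calling it once per scan as the robot moves, with the pose reported at that instant, is what lets a single fixed 2-D LRF sweep out a 3-D map. Keeping the maximum height per cell is only one conservative choice for obstacle detection, and the sketch does nothing about mixed pixels or noise.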

Elevation map built by our terrain mapping method with the novel filter

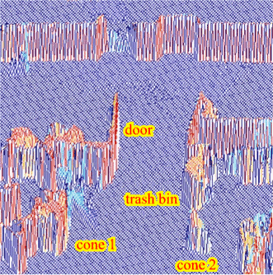

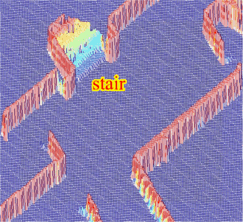

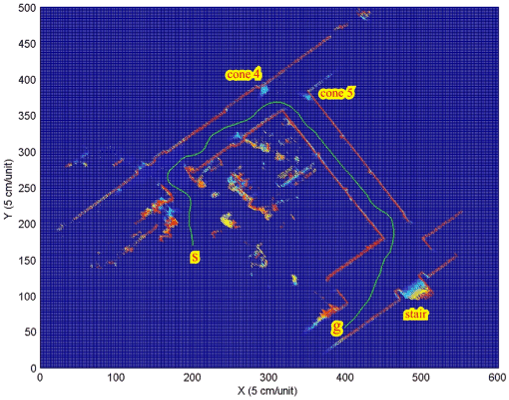

(a) Top view of the entire map (ATL building basement) built by the mapping system

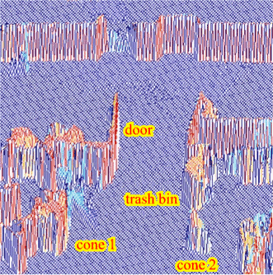

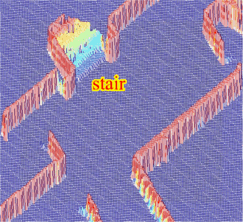

(b) Correspondence between scenes and maps